
Add podGroup completed phase #2667


Branch updated from 9d31199 to 3a6c9e4.

Signed-off-by: Gaizhi <[email protected]>

Signed-off-by: shaoqiu <[email protected]>

Branch updated from 3a6c9e4 to cf17b60.

`pg.queue.Add(req)`

`}`

`func (pg *pgcontroller) addReplicaSet(obj interface{}) {`


@waiterQ As far as I know, when a ReplicaSet is created it always has 0 replicas, and after creation it is scaled up to the desired replica count. So why delete the PodGroup in both addReplicaSet and updateReplicaSet, rather than only in updateReplicaSet?


Yes, you're right. In normal operation on a single version, Volcano only needs updateReplicaSet. But when Volcano is upgraded from one version to another, without addReplicaSet nothing would clean up the stock PodGroups already in the cluster. So addReplicaSet handles already-existing PodGroups, and updateReplicaSet handles upcoming ones.
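The reply relies on how informer event handlers behave: when the controller starts, a client-go shared informer delivers every ReplicaSet that already exists in the cluster through the add handler, and only later changes arrive through the update handler. Below is a minimal, self-contained Go sketch of that reasoning, not Volcano's code. `ReplicaSet`, `gcController`, `replicasOf`, and `int32Ptr` are hypothetical stand-ins, the handlers take typed arguments instead of `obj interface{}`, and `replicasOf` is an assumed defensive variant of the `*rs.Spec.Replicas == 0` check that treats a nil pointer as the Kubernetes default of 1 replica.

```go
package main

import "fmt"

// ReplicaSet is a hypothetical, trimmed-down stand-in for appsv1.ReplicaSet;
// like the real type, Replicas is a pointer.
type ReplicaSet struct {
	Name     string
	Replicas *int32
}

// gcController is a hypothetical controller that records which
// ReplicaSets would have their PodGroup garbage-collected.
type gcController struct {
	deleted []string
}

// replicasOf dereferences Replicas defensively (assumption, not the PR code):
// a nil pointer is treated as the Kubernetes default of 1 replica.
func replicasOf(rs *ReplicaSet) int32 {
	if rs.Replicas == nil {
		return 1
	}
	return *rs.Replicas
}

// maybeDeletePodGroup mimics the "replicas == 0" check from the diff.
func (c *gcController) maybeDeletePodGroup(rs *ReplicaSet) {
	if replicasOf(rs) == 0 {
		c.deleted = append(c.deleted, rs.Name)
	}
}

// addReplicaSet fires for every object in the informer's initial list,
// i.e. also for ReplicaSets that existed before the controller started.
func (c *gcController) addReplicaSet(rs *ReplicaSet) { c.maybeDeletePodGroup(rs) }

// updateReplicaSet fires when an existing ReplicaSet changes after startup.
func (c *gcController) updateReplicaSet(oldRS, newRS *ReplicaSet) { c.maybeDeletePodGroup(newRS) }

func int32Ptr(v int32) *int32 { return &v }

func main() {
	c := &gcController{}
	// Left over from before the upgrade: an old ReplicaSet already at 0
	// replicas that still owns a stale PodGroup.
	existing := []*ReplicaSet{
		{Name: "web-old", Replicas: int32Ptr(0)},
		{Name: "web-new", Replicas: int32Ptr(3)},
	}
	// On startup the informer replays existing objects as add events only.
	for _, rs := range existing {
		c.addReplicaSet(rs)
	}
	fmt.Println(c.deleted) // [web-old]

	// A later scale-down of a live ReplicaSet arrives as an update event.
	c.updateReplicaSet(existing[1], &ReplicaSet{Name: "web-new", Replicas: int32Ptr(0)})
	fmt.Println(c.deleted) // [web-old web-new]
}
```

Without the add handler, `web-old` would never be revisited after an upgrade, because it produces no further update events on its own (apart from periodic resyncs, if the informer is configured with one).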

`return`

`}`

`if *rs.Spec.Replicas == 0 {`


During a rolling upgrade there may be two ReplicaSets with non-zero replicas at the same time, which means two PodGroups exist. Does this matter?


I think this comes from the Deployment's rollingUpdate strategy: while pods are being rolled, two kinds of pods definitely exist at the same time. I think that's normal, not a problem.
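To illustrate the rolling-upgrade question, here is a rough Go simulation under stated assumptions: one PodGroup per ReplicaSet, a ReplicaSet's PodGroup is garbage-collected once it is scaled to 0, and a simplified rollout with maxSurge=1 and maxUnavailable=0 (the Deployment controller's real scaling steps can differ). `step`, `rollingSteps`, and `podGroupsAlive` are hypothetical names, not Volcano or Kubernetes APIs.

```go
package main

import "fmt"

// step is one simplified rolling-update snapshot: the replica counts of the
// old and new ReplicaSets (illustrative numbers, not the real controller's math).
type step struct{ oldReplicas, newReplicas int32 }

// rollingSteps simulates rolling out `desired` replicas with maxSurge=1 and
// maxUnavailable=0: scale the new ReplicaSet up by one, then the old one down by one.
func rollingSteps(desired int32) []step {
	steps := []step{{desired, 0}}
	oldR, newR := desired, int32(0)
	for newR < desired {
		newR++ // surge one new pod
		steps = append(steps, step{oldR, newR})
		oldR-- // then retire one old pod
		steps = append(steps, step{oldR, newR})
	}
	return steps
}

// podGroupsAlive counts ReplicaSets whose PodGroup survives the GC,
// i.e. those with a non-zero replica count.
func podGroupsAlive(s step) int {
	n := 0
	for _, r := range []int32{s.oldReplicas, s.newReplicas} {
		if r != 0 {
			n++
		}
	}
	return n
}

func main() {
	for _, s := range rollingSteps(3) {
		fmt.Printf("old=%d new=%d podGroups=%d\n", s.oldReplicas, s.newReplicas, podGroupsAlive(s))
	}
}
```

For 3 replicas it reports 1 PodGroup before the rollout, 2 at every intermediate step, and 1 once the old ReplicaSet reaches 0, so the second PodGroup is transient under these assumptions.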

Please add the test results to the PR, thanks.

OK, done.

william-wang left a comment


/lgtm

`@@ -0,0 +1,189 @@`

`/*`

`Copyright 2021 The Volcano Authors.`


The copyright is not correct.

`Expect(len(pgs.Items)).To(Equal(1), "only one podGroup should exist")`

`})`

`It("k8s Job", func() {`


Please use a formal and complete description for this func.

`fmt.Sprintf("expectPod %d, q1ScheduledPod %d, q2ScheduledPod %d", expectPod, q1ScheduledPod, q2ScheduledPod))`

`})`

`It("changeable Deployment's PodGroup", func() {`


Please give a formal and complete description for this func.

`@@ -0,0 +1,189 @@`

`/*`


This is a util package; why name the file deployment.go?

[APPROVALNOTIFIER] This PR is APPROVED. This pull request has been approved by: william-wang.

`if int32(allocated) >= jobInfo.PodGroup.Spec.MinMember {`

`status.Phase = scheduling.PodGroupRunning`

`// If all allocated tasks have succeeded, it's completed`

`if len(jobInfo.TaskStatusIndex[api.Succeeded]) == allocated {`


For a native batch/v1 Job that uses `.spec.completions` and `.spec.parallelism`: suppose 10 pods have succeeded while the queue is full, so the other 10 pods stay Pending. Then `len(jobInfo.TaskStatusIndex[api.Succeeded]) == allocated` will be true, and the PodGroup status becomes Completed even though the job has not finished. Could this happen?
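The reviewer's scenario can be reproduced with a simplified Go model of the condition in the diff. This sketch is hypothetical: `Phase`, `jobTasks`, and `phaseAsReviewed` are stand-ins for Volcano's `JobInfo` and `scheduling.PodGroupPhase`. It assumes, as the condition implies, that `allocated` also counts succeeded tasks, that Pending pods are not counted, and that the PodGroup's `minMember` is 1. To keep it short, the branch below `minMember` simply returns the current phase unchanged.

```go
package main

import "fmt"

// Phase is a simplified stand-in for scheduling.PodGroupPhase.
type Phase string

const (
	Inqueue   Phase = "Inqueue"
	Running   Phase = "Running"
	Completed Phase = "Completed"
)

// jobTasks is a hypothetical summary of a job's task counts by status.
type jobTasks struct {
	minMember int32
	running   int // tasks that hold an allocation (allocated/bound/running)
	succeeded int // tasks that finished successfully
	pending   int // tasks still waiting to be scheduled, e.g. because the queue is full
}

// phaseAsReviewed mirrors the condition under review: allocated includes
// succeeded tasks, and Completed is reported when every allocated task has
// succeeded. Pending tasks are never looked at.
func phaseAsReviewed(current Phase, j jobTasks) Phase {
	allocated := j.running + j.succeeded
	if int32(allocated) >= j.minMember {
		if j.succeeded == allocated {
			return Completed
		}
		return Running
	}
	// Simplification: leave the phase unchanged below minMember.
	return current
}

func main() {
	// Reviewer's case: completions=20, parallelism=10; the first 10 pods
	// succeeded and the next 10 are Pending because the queue is full.
	j := jobTasks{minMember: 1, succeeded: 10, pending: 10}
	fmt.Println(phaseAsReviewed(Running, j)) // Completed
}
```

With 10 succeeded and 10 Pending tasks the model returns `Completed` even though the Job still has work left, which is the situation the reviewer asks about.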

Modification Motivation

Volcano can schedule normal pods, but under some conditions their podGroups are left in the wrong phase. When scheduling a k8s Job, the podGroup stays in the Running phase after its pods have completed; and changing a Deployment's requests leaves the old podGroup in the Inqueue phase.

So I picked up and fixed #2589 (Feature/add replicaset gc pg), and added a Completed phase for the podGroups of normal pods to enhance podGroup.

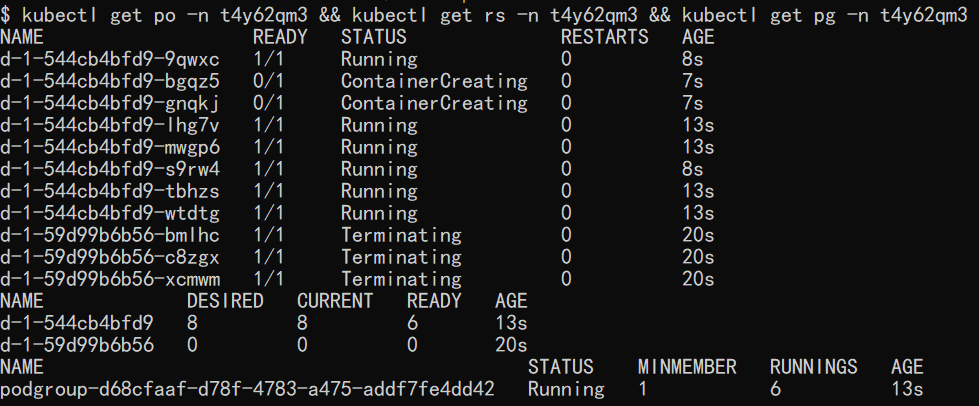

Test Result

While the deployment is rolling-updating, two podgroups exist.

When the deployment's rolling update is over, one podgroup is left.

When the k8s job is completed, its podgroup is completed.

CI e2e result