-

Notifications

You must be signed in to change notification settings - Fork 26.3k

Update documentation for CTCLoss #18415

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

torch/nn/modules/loss.py

Outdated

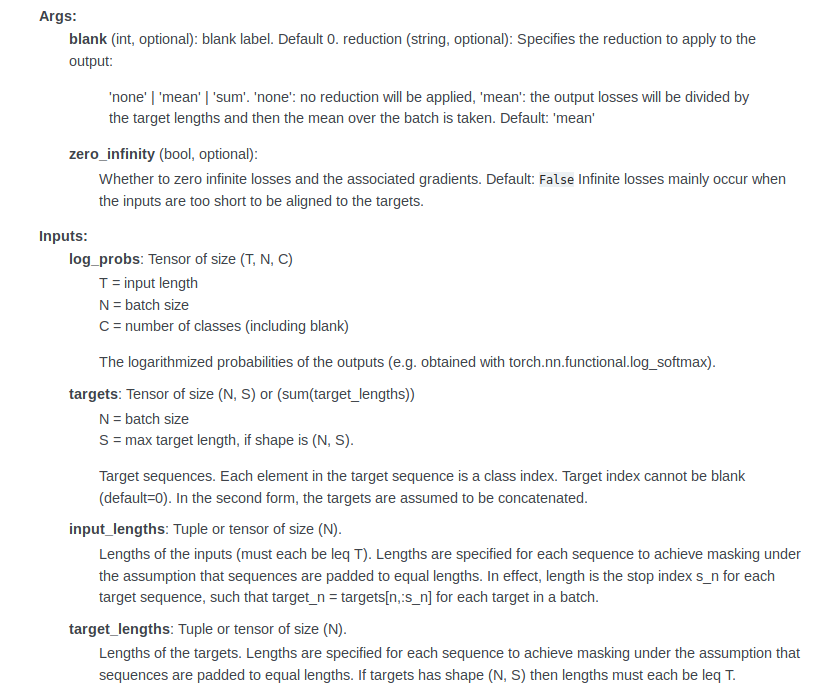

| targets: Tensor of size :math:`(N, S)` or `(sum(target_lengths))`. | ||

| Targets (cannot be blank). In the second form, the targets are assumed to be concatenated. | ||

| targets: Tensor of size :math:`(N, S)` or `(sum(target_lengths))`, where `N = batch size`, and | ||

| `S = maximum target length`. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What's the effect of changing the indent here?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Did you mean on line 1238?

The indent means that the remaining words in that sentence will belong to the header block when rendered. I noticed that some of the other docs don't do this, so I have actually changed it again to something else.

torch/nn/modules/loss.py

Outdated

| such that :math: `target_n = targets[n,:s_n]` for each target in a batch. | ||

| target_lengths: Tuple or tensor of size :math:`(N)`. | ||

| Lengths of the targets | ||

| Lengths of the targets (may be :math: < S) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Maybe should be <= S if targets is a two-dimensional tensor (but with :math:) would be clearer.

torch/nn/modules/loss.py

Outdated

| >>> | ||

| >>> min_target_length = 10 | ||

| >>> max_target_length = S | ||

| >>> |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

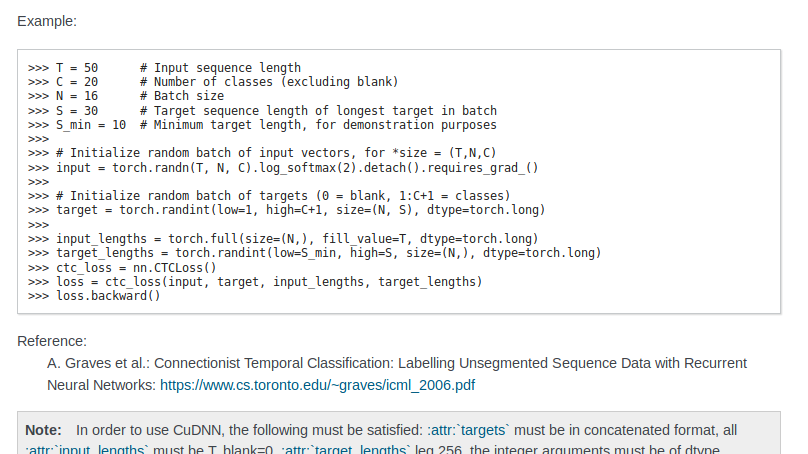

I must admit that the blank lines are just too much. Also, I wonder, if we need to introduce a second name for S.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I redid the naming it in the latest update. Also removed some of the self explanatory comments, and removed some spaces.

|

Thank you for your patch! It will be nice to have the improved documentation. |

torch/nn/modules/loss.py

Outdated

| targets: Tensor of size :math:`(N, S)` or `(sum(target_lengths))`, where `N = batch size`, and | ||

| `S = maximum target length`. | ||

| Each element in the target sequence is a class index. Target index cannot be blank (default=0). | ||

| In the second form, the targets are assumed to be concatenated. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Are the targets concatenated with or without the padding to make it a fixed (N, S)?

Maybe it would be useful to explicitly comment on that.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think the answer is "with padding". I actually still don't understand how the (sum(target_lengths)) form works... it doesn't seem like that actually describes a shape. Can @t-vi comment on this?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

That's a 1-d shape. (sum(target_lengths),) would probably be more pythonesque.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ah no I see now. Not sure why I was confused before. The comma is probably not necessary.

|

Ok I made some changes, hopefully for the better. Since you asked about rendering, I didn't want to try to build this because of the dependencies and errors I got when I tried, but i did find a cheap renderer online (missing the math plugin) to give a sense of the appearance |

t-vi

left a comment

t-vi

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thank you for checking the rendering! (I had half-hoped that you'd go through the trouble of installing the doc dependencies and make a habit of improving those things you find imperfect. 😉)

From my point of view, this can go in, unless you want to do something about the less than perfect explanation of concatenated targets.

|

No problem. I will make one more edit tomorrow to address the lengths/concatenation, since there is no rush. Also, does the alternative concatenated form not also apply to inputs? |

|

|

OK, I'll wait for the last change before landing this |

|

Thanks! Two quick comments:

|

|

Ah sorry about that, I will fix it, and use your wording for target_lengths |

|

Looks good! Thanks! But there is a merge conflict for the formatting of the reduction strings. |

|

I fixed the merge conflict. Thanks a lot @rlorigro ! |

facebook-github-bot

left a comment

facebook-github-bot

left a comment

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@soumith is landing this pull request. If you are a Facebook employee, you can view this diff on Phabricator.

|

Thanks @soumith for fixing the conflict. Does this page get updated whenever the next version of pytorch is officially released: https://pytorch.org/docs/stable/nn.html#ctcloss ? |

|

@rlorigro yes. right now master docs are already updated: https://pytorch.org/docs/master/nn.html#ctcloss |

|

Awesome, thanks. Ah it looks like the kerning on the "math" font is making it almost unreadable in some places. That was unfortunately not rendered in the renderer I used for testing. Also the underscore is being parsed as a subscript in |

|

why is the input size for class is C = 20 which excluding blank? If the network does not predict the blank in the output, why would this loss make sense...

instead of

|

|

I think you are right, it should actually be |

This is meant to resolve #18249, where I pointed out a few things that could improve the CTCLoss docs.

My main goal was to clarify:

targetandinputare needed for masking unequal length sequences, and do not necessarily = S, which is the length of the longest sequence in the batch.I thought about Thomas's suggestion to link the distill.pub article, but I'm not sure about it. I think that should be up to y'all to decide.

I have no experience with .rst, so it might not render as expected :)

@t-vi @ezyang