-

Notifications

You must be signed in to change notification settings - Fork 1.5k

[close #1802] Close listeners on SIGTERM #1808

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

Currently when a SIGTERM is sent to a puma cluster, the signal is trapped, then sent to all children, it then waits for children to exit and then the parent process exits. The socket that accepts connections is only closed when the parent process calls `exit 0`. The problem with this flow is there is a period of time where there are no child processes to work on an incoming connection, however the socket is still open so clients can connect to it. When this happens, the client will connect, but the connection will be closed with no response. Instead, the desired behavior is for the connection from the client to be rejected. This allows the client to re-connect, or if there is a load balance between the client and the puma server, it allows the request to be routed to another node. This PR fixes the existing behavior by manually closing the socket when SIGTERM is received before shutting down the workers/children processes. When the socket is closed, any incoming requests will fail to connect and they will be rejected, this is our desired behavior. Existing requests that are in-flight can still respond. ## Test This behavior is quite difficult to test, you'll notice that the test is far longer than the code change. In this test we send an initial request to an endpoint that sleeps for 1 second. We then signal to other threads that they can continue. We send the parent process a SIGTERM, while simultaneously sending other requests. Some of these will happen after the SIGTERM is received by the server. When that happens we want none of the requests to get a `ECONNRESET` error, this would indicate the request was accepted but then closed. Instead we want `ECONNREFUSED`. I ran this test in a loop for a few hours and it passes with my patch, it fails immediately if you remove the call to close the listeners. ``` $ while m test/test_integration.rb:235; do :; done ``` ## Considerations This PR only fixes the problem for "cluster" (i.e. multi-worker) mode. When trying to reproduce the test with single mode, on (removing the `-w 2` config) it already passes. This leads us to believe that either the bug does not exist in single threaded mode, or at the very least reproducing the bug via a test in the single threaded mode requires a different approach. Co-authored-by: Danny Fallon <[email protected]> Co-authored-by: Richard Schneeman <[email protected]>

|

The maintainers think this looks good, but could use feedback. If you're experiencing issues with closed connections during shutdowns please give this a shot ❤️ |

|

We had this issue, our workaround was to send SIGTERM to nginx(we run puma behind nginx) and then sleep for 15 seconds and send SIGTERM to puma. |

|

Awesome, thanks for the info @adamlogic! |

|

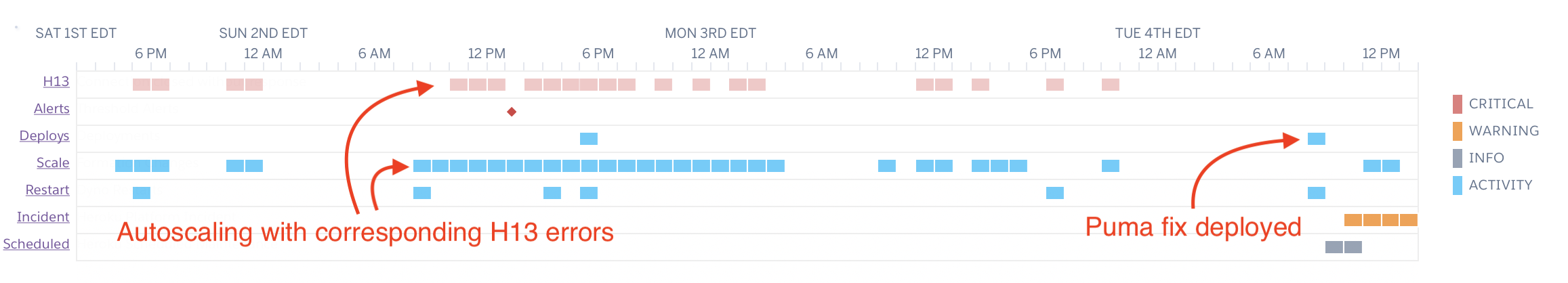

After giving it about 24 hours, I'm definitely seeing different behavior, although I can't quite make sense of it. I typically get 1-2 H13 errors during a downscale or restart, spread throughout the day. Yesterday I only saw one instance of H13 errors, but it was a burst of 37 errors. Quite unusual. I'm hoping that was an anomaly. Going to keep this patch running for now. |

|

There was also an incident at that time, so it might be related? Thanks for running this, keep me updated. |

|

How did we do on the next 24 hours? One theory on where this might be going south is that the default behavior for Puma is not to drain the socket backlog. There's a setting for it though. Just a theory, haven't tested. |

|

Spoke too soon. Got 4 H13 errors last night. Dug into my logs and found this error which correlates exactly with the H13 errors. This is the only instance of this error in my logs, even though I've had many downscale events. LMK if there's any more info that would be helpful. |

|

I’ve seen that error before, it happens because the child got closed before the parent tried to wait on it. When there is no child we should rescue that exception and keep going. Heroku sends TERM to all processes not just the one it spawned. So sometimes the children close before the parent can send a TERM to them. I’ll update later today with a fix for that and let you know. |

On Heroku, and potentially other platforms, SIGTERM is sent to ALL processes, not just the parent process. This means that by the time the parent worker tries to wait on it's children to shut down, they may not exist. When that happens an `Errno::ECHILD` error is raised as seen in this comment puma#1808 (comment). In that situation we don't really care that there's no child, we want to continue the shutdown process in an orderly fashion so we can safely ignore the error and move on.

To avoid a `send` call in `cluster.rb` and to indicate to the maintainers that other classes use this method, we will make it public.

|

@adamlogic I just added 8c78ee2 which should resolve that error. I don't know if that's the cause of the H13 or just covering up some other behavior. If fixing that error doesn't resolve the H13 then I would suggest setting |

|

👍 Thanks! Just deployed with 84ce04d. I'll post another update on Monday. |

On Heroku, and potentially other platforms, SIGTERM is sent to ALL processes, not just the parent process. This means that by the time the parent worker tries to wait on it's children to shut down, they may not exist. When that happens an `Errno::ECHILD` error is raised as seen in this comment puma#1808 (comment). In that situation we don't really care that there's no child, we want to continue the shutdown process in an orderly fashion so we can safely ignore the error and move on.

Issue link: #1802

Currently when a SIGTERM is sent to a puma cluster, the signal is trapped, then sent to all children, it then waits for children to exit and then the parent process exits. The socket that accepts connections is only closed when the parent process calls

exit 0. The problem with this flow is there is a period of time where there are no child processes to work on an incoming connection, however the socket is still open so clients can connect to it. When this happens, the client will connect, but the connection will be closed with no response. Instead, the desired behavior is for the connection from the client to be rejected. This allows the client to re-connect, or if there is a load balance between the client and the puma server, it allows the request to be routed to another node.This PR fixes the existing behavior by manually closing the socket when SIGTERM is received before shutting down the workers/children processes. When the socket is closed, any incoming requests will fail to connect and they will be rejected, this is our desired behavior. Existing requests that are in-flight can still respond.

Test

This behavior is quite difficult to test, you'll notice that the test is far longer than the code change. In this test we send an initial request to an endpoint that sleeps for 1 second. We then signal to other threads that they can continue. We send the parent process a SIGTERM, while simultaneously sending other requests. Some of these will happen after the SIGTERM is received by the server. When that happens we want none of the requests to get a

ECONNRESETerror, this would indicate the request was accepted but then closed. Instead we wantECONNREFUSED.I ran this test in a loop for a few hours and it passes with my patch, it fails immediately if you remove the call to close the listeners.

Considerations

This PR only fixes the problem for "cluster" (i.e. multi-worker) mode. When trying to reproduce the test with single mode, on (removing the

-w 2config) it already passes. This leads us to believe that either the bug does not exist in single threaded mode, or at the very least reproducing the bug via a test in the single threaded mode requires a different approach.