-

Notifications

You must be signed in to change notification settings - Fork 1.5k

Meter calling accept(2) with available pool capacity #1278

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

Talking through this with Nate and Richard, we realized that accepting new clients without taking account for the available capacity of the thread pool doesn't improve throughput, it only hurts it in the case of multiple workers. If a worker pauses (or starts up before other workers), then a worker can accidentally suck up a high number of clients and leave unused capacity inside the other workers. This change will smooth out this issue, with a minor penalty to maximum throughput.

|

Linking #1107 |

|

I think this could really improve performance for a lot of people, so I'm going to start looking at packaging up a new release. |

|

@evanphx and @nateberkopec, thanks for looking into this and looking forward to a puma release :) |

|

👍 since the Jruby bug on master was fixed, I think I can push a 3.9.0 soon. |

|

This will also resolve some potential deadlocking issues for APIs that can sometimes end up calling themselves (i.e. authentication systems that use microservices that need authenticating). 👍👍 |

|

@evanphx @schneems Do you recommend this upgrade for apps that are not yet threadsafe? And does that answer change if the app is also behind the Heroku router? If I have N dynos with X workers each -- and the Heroku router picks a single busy worker in each of my N dynos, would that request fail due to the combination of this PR and a non-threadsafe app? (I've tested this theory with References: |

I actually don't recommend puma for apps that aren't threadsafe, unless it's a step to getting the app threadsafe. I recommend the I've seen performance issues with Puma using X workers and 1 thread compared to Unicorn with X workers. I'm not totally sure why, but i've seen this several times. You could also try passenger. I don't think this patch would hurt if you're only using 1 thread, but i've seen other perf issues with that setup. I would recommend either putting in leg work to get the app threadsafe, or moving to a different server. |

|

|

||

| pool << client | ||

| pool.wait_until_not_full unless queue_requests | ||

| pool.wait_until_not_full |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Was there a reason queue_requests was removed here?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think the reason is explained adequately in the OP?

| # If we can still spin up new threads and there | ||

| # is work queued, then accept more work until we would | ||

| # spin up the max number of threads. | ||

| return if @todo.size < @max - @spawned |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is not an equivalent of initial condition. With this change puma serving a single-threaded app with "threads 1,1" attempts to pass a request to a worker that is already serving a request. We're seeing occasional deadlocks for the requests coming right after a long-running request in our environment with "threads 1,1", "queue_requests false", and "workers 5" configuration. Rolling back this single commit to the original solves the issue completely.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

In the case of a single-threaded worker, @waiting will be 0, @todo.size will be 1, @max will be 1 and @spawned will be 1, so the condition evaluates to 1 < 1 - 1, which is zero, so Puma waits here.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The problem is in line:

+ return if @waiting > 0

This is not how the original condition worked: until @todo.size - @waiting < @max - @spawned or @shutdown. The fact that @waiting > 0 is not enough for decision not to do @not_full.wait @mutex.

I prepared a simple test scenario which demonstrates the problem: https://gist.github.com/ivanpesin/f40a76e51a60a83f1190113fdecddaa5

With this commit, second request gets always blocked until first request is served, even though there are available workers. Third requests goes to available worker.

With original condition, second request goes to next available worker and works as expected. The condition needs to be either reverted to original form or needs logic correction.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We also had this issue.

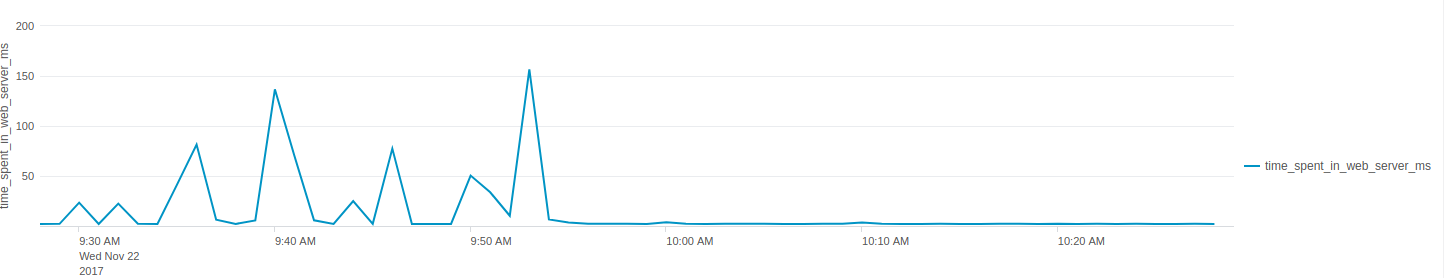

Our setup is using 16 workers with 1 thread each running with an nginx server in front. We were getting high wait times between nginx and rails as measured by a middleware similar to the one at #1405 (comment). We partially undid the change in this pull request with the patch below and our wait times dropped as can be seen by the attached screenshot.

module PumaMonkeypatch

def wait_until_not_full

@mutex.synchronize do

until @todo.size - @waiting < @max - @spawned or @shutdown

@not_full.wait @mutex

end

end

end

end

Puma::ThreadPool.prepend(::PumaMonkeypatch)Thoughts on how to proceed? Should we file a new issue?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Should we file a new issue?

Please do.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks. Ticket created: #1471

|

If I'm understanding this change correctly: Previously Now In effect, this leaves requests unaccepted (in the socket) instead of accepted and waiting for processing by the worker ("in" the Around the water cooler, it seems some are concerned that To clarify, my understanding is that there is still benefit in having the Is this correct? |

That is correct. |

As part of the work in puma#1278 we refactored the conditional in `ThreadPool#wait_until_not_full` because we found it difficult to reason about at the RailsConf '17 roundtable. We broke it up a bit and everyone went away happier. However it turns out that you need to account for both `@waiting` and `@todo.size` together to make a determination on whether to accept more work from the socket and the refactor was not equivalent to the old code. There are no guarantees that request threads that have been signalled due to work being added to the thread pool's queue will become active, take the mutex and decrement `@waiting` before the main worker's thread runs `ThreadPool#wait_until_not_full`. If we run that before the request thread decrements @waiting we end up hitting the early exit of `return @waiting > 0`, taking more work off the socket than the pool's capacity. The correct way to determine the number of requests we have queued in the pool that are outstanding is `@todo.size - @waiting`, so I've reverted to the old behaviour and augmented the comment above it.

In PR puma#1278 this was changed to avoid using the "queue_requests" params, so workers only accept new connections if they have a free thread. This commit reverts that. I guess that change was beneficial for the most typical deployment scenarios, but it has a noticeable performance penalty in the case of Apisonator given that it is single threaded. Even with a moderate number of connections there are lots of time-outs.

In PR puma#1278 this was changed to avoid using the "queue_requests" params, so workers only accept new connections if they have a free thread. This commit reverts that. I guess that change was beneficial for the most typical deployment scenarios, but it has a noticeable performance penalty in the case of Apisonator given that it is single threaded. Even with a moderate number of connections there are lots of time-outs.

In PR puma#1278 this was changed to avoid using the "queue_requests" params, so workers only accept new connections if they have a free thread. This commit reverts that. I guess that change was beneficial for the most typical deployment scenarios, but it has a noticeable performance penalty in the case of Apisonator given that it is single threaded. Even with a moderate number of connections there are lots of time-outs.

In PR puma#1278 this was changed to avoid using the "queue_requests" params, so workers only accept new connections if they have a free thread. This commit reverts that. I guess that change was beneficial for the most typical deployment scenarios, but it has a noticeable performance penalty in the case of Apisonator given that it is single threaded. Even with a moderate number of connections there are lots of time-outs.

Talking through this with @nateberkopec, @schneems, @FooBarWidget, and others at RailsConf , we realized that accepting new clients without taking account for the available capacity of the thread pool doesn't improve throughput, it only hurts it in the case of multiple workers. If a worker pauses (or starts up before other workers), then a worker can accidentally suck up a high number of

clients and leave unused capacity inside the other workers.

This change will smooth out this issue, with a minor penalty to maximum throughput.