Description

We are having a critical issue in gpt-neox: loading checkpoints through DeepSpeed isn't working correctly. When we reload the model from a checkpoint, the loss suggests that nothing was loaded at all and that training is starting again from initialization (it jumps from ~4 at checkpoint save to 8 at checkpoint load).

The model is based on the Megatron example in DeepSpeedExamples, and as far as I'm aware the checkpoint saving / loading hasn't changed at all from there (it should be handled almost entirely by model_engine.save_checkpoint / load_checkpoint).

It happens both with the pipeline parallel module, and without.
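
One way to tell "the weights were never restored" apart from "the weights were restored but something else (optimizer state, loss scale, data order) was reset" is to fingerprint the parameters right before save_checkpoint and again right after load_checkpoint. The following is only a minimal sketch of that idea: `fingerprint` and `changed_params` are hypothetical helpers, not part of DeepSpeed or gpt-neox, and they assume each parameter has already been flattened to plain floats (with torch, something like `{k: v.flatten().tolist() for k, v in model.state_dict().items()}`).

```python
import hashlib
import struct
from typing import Dict, Iterable, List, Mapping


def fingerprint(params: Mapping[str, Iterable[float]]) -> Dict[str, str]:
    """Return a short SHA-256 digest per parameter name.

    `params` maps a parameter name to its values as plain floats
    (an assumption of this sketch, not a DeepSpeed format).
    """
    digests = {}
    for name, values in params.items():
        h = hashlib.sha256()
        for v in values:
            # Pack as little-endian float64 so the digest is platform independent.
            h.update(struct.pack("<d", float(v)))
        digests[name] = h.hexdigest()[:16]
    return digests


def changed_params(before: Mapping[str, str], after: Mapping[str, str]) -> List[str]:
    """Names whose digest differs, or that exist on only one side."""
    names = set(before) | set(after)
    return sorted(n for n in names if before.get(n) != after.get(n))


# Toy demonstration with hand-written values instead of real model weights.
saved = fingerprint({"w": [0.1, 0.2], "b": [0.0]})
reloaded_ok = fingerprint({"w": [0.1, 0.2], "b": [0.0]})
reloaded_bad = fingerprint({"w": [0.3, 0.2], "b": [0.0]})
print(changed_params(saved, reloaded_ok))
print(changed_params(saved, reloaded_bad))
```

If every parameter shows up as changed after reload, the checkpoint most likely never reached the model; if none do, the jump in loss probably comes from somewhere other than the weights. With model or pipeline parallelism each rank only holds its own shard, so the comparison would have to be done per rank.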

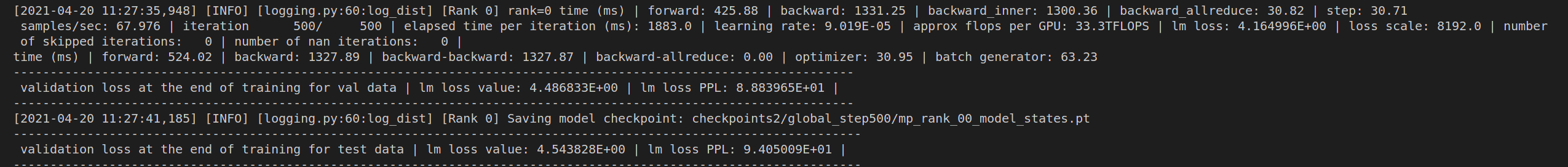

You can see here an example of training loss before saving a checkpoint

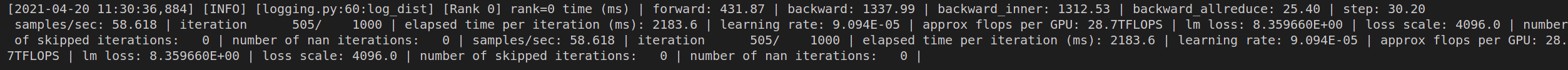

and then the loss directly afterward:

Would appreciate any help to decipher what's going on here.

We're technically using our own DeepSpeed fork, but there are no modifications to the checkpoint saving / loading logic there either.

This is about as minimal a reproduction as I can give right now.

First, set up the environment in bash (this assumes you're running on an AWS machine, but it should work on any base image with torch + CUDA 11.1; just comment out the first line):

    # first activate the correct AWS env with torch 1.8.0 + cuda 11.1
    source activate pytorch_latest_p37

    # clone repo and cd into it
    git clone https://github.com/EleutherAI/gpt-neox
    cd gpt-neox

    # install other requirements
    pip install pybind11==2.6.2 six regex numpy==1.20.1 nltk==3.5 \
        -e git+git://github.com/EleutherAI/DeeperSpeed.git@0e9573776caf7227ecafdc8a3d57c7955165d8e2#egg=deepspeed \
        zstandard==0.15.1 cupy-cuda111 mpi4py==3.0.3 wandb==0.10.21 einops==0.3.0 transformers tokenizers lm_dataformat triton==1.0.0.dev20210329

    # install apex
    pip install -v --disable-pip-version-check --no-cache-dir --global-option="--cpp_ext" --global-option="--cuda_ext" git+https://github.com/NVIDIA/apex.git@e2083df5eb96643c61613b9df48dd4eea6b07690

    # prepare dummy gpt2 data
    python prepare_data.py

Then, in a Python script, train a small model and try to continue training:

    from yaml import load, dump
    try:
        from yaml import CLoader as Loader, CDumper as Dumper
    except ImportError:
        from yaml import Loader, Dumper
    import os

    # modify train iters in small.yml to 500
    with open('configs/small.yml', 'r') as f:
        data = load(f, Loader=Loader)
    data.update({'train-iters': 500})
    with open('configs/tmp.yml', 'w') as f:
        f.write(dump(data))

    # start run
    os.system('./deepy.py pretrain_gpt2.py -d configs tmp.yml local_setup.yml')

    # kill any hanging processes
    os.system("pkill -f deepy.py")

    # update train iters again
    with open('configs/small.yml', 'r') as f:
        data = load(f, Loader=Loader)
    data.update({'train-iters': 550})
    with open('configs/tmp.yml', 'w') as f:
        f.write(dump(data))

    # start run again
    os.system('./deepy.py pretrain_gpt2.py -d configs tmp.yml local_setup.yml')
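
Between the two runs it may also be worth checking that the first run actually left a checkpoint where the second run will look for one. The sketch below rests on assumptions rather than anything confirmed in this issue: that the save directory contains a `latest` file holding the checkpoint tag, with one subdirectory per tag (my understanding of DeepSpeed's default layout), and that the save directory is called `checkpoints`, which is only a placeholder for whatever the config sets. `resolve_latest_checkpoint` is a hypothetical helper.

```python
from pathlib import Path
from typing import Optional


def resolve_latest_checkpoint(save_dir: str) -> Optional[Path]:
    """Return the directory named by `<save_dir>/latest`, or None if unusable.

    Assumed layout (not verified against the fork used here):
        <save_dir>/latest        text file containing the tag
        <save_dir>/<tag>/...     the checkpoint files for that tag
    """
    root = Path(save_dir)
    latest = root / "latest"
    if not latest.is_file():
        return None
    tag = latest.read_text().strip()
    if not tag:
        return None
    ckpt_dir = root / tag
    # An empty or missing tag directory means there is nothing to load.
    if ckpt_dir.is_dir() and any(ckpt_dir.iterdir()):
        return ckpt_dir
    return None


if __name__ == "__main__":
    # "checkpoints" is a placeholder path; use the save dir from your config.
    found = resolve_latest_checkpoint("checkpoints")
    print("checkpoint to resume from:", found)
```

If this prints `None` after the first run, the second run has nothing to resume from and would start from initialization. As far as I understand it, load_checkpoint also returns the path it loaded from, so logging that return value in the training script would be a complementary check.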