-

-

Notifications

You must be signed in to change notification settings - Fork 750

Set runspec on all new tasks to avoid deadlocks #4432

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

I notice that this is still in WIP status. Are the changes here an improvement over what is in master? If so, I might press the "Ready for review" button |

|

I'll note that the failures in |

Also, check that we don't release keys with dependents

|

I'm currently testing the latest commit on a few clusters in parallel. So far, no funny logs, no deadlocks, no problems at all. |

|

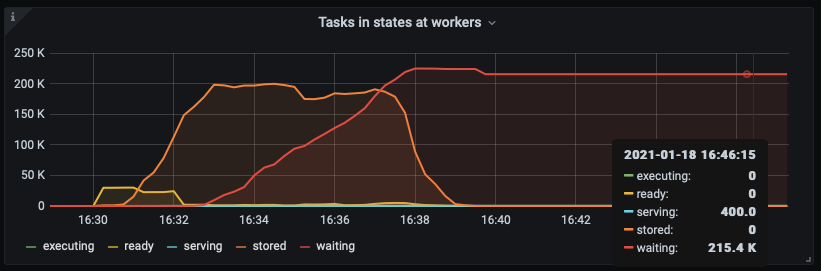

The only thing I could observe, so far is that the waiting_for_data_count is off but I checked the workers, both data and tasks are empty and I suspected a bug in this counter already. I believe this is not critical, though, since the counter is not used internally, merely for instrumentation (The following graph shows states as exposed by prometheus. Not identical to internal states) |

|

That's great news

…On Mon, Jan 18, 2021 at 8:49 AM Florian Jetter ***@***.***> wrote:

The only thing I could observe, so far is that the waiting_for_data_count

is off but I checked the workers, both data and tasks are empty and I

suspected a bug in this counter already. I believe this is not critical,

though, since the counter is not used internally, merely for instrumentation

[image: image]

<https://user-images.githubusercontent.com/8629629/104942900-48302700-59b5-11eb-9c62-2d5690ee3537.png>

—

You are receiving this because you commented.

Reply to this email directly, view it on GitHub

<#4432 (comment)>,

or unsubscribe

<https://github.com/notifications/unsubscribe-auth/AACKZTDWI65NH6CXLLSSXCTS2RRAFANCNFSM4WEK5ONQ>

.

|

975caac to

1fc1b46

Compare

|

Needed to push another time. There was actually a typo on my side causing a test to fail. |

|

Unfortunately, I needed to push another small change but an expected one where we didn't clear I'm dropping out now but if there is no big CI thing popping up again, I feel confident merging this now. I'm not sure if it fixes all issues but it should provide a significant improvement to master from what I've seen. |

|

@fjetter are there other issues that you know exist? If so I'm happy to take a look at them today. |

|

On the other PR I originally wanted to fix an issue with erroneous tasks which led me to discover this runspec thing. I added a test case with an object with broken deserialization. That should hit the worker internal suspicious counter (but it doesn't/didn't) something else might reveal itself there. Otherwise I don't have anything on my mind right now |

|

OK, once tests pass here I'm going to merge. If things are ok for a bit I suggest that we release. cc @jrbourbeau |

|

I briefly tried things with the exercise in #4430 and things didn't complete. I need to double check that work though. |

|

Ah, indeed, things with that workflow are good for me too as well now. Good for a release whenever makes sense @jrbourbeau . I suspect that you're aiming for this Friday. |

|

xref dask/community#121 |

This is a more minimalistic version of #4360 with fixes I am pretty confident with. I still can see local issue, though and this is incomplete.