
Closed

Description

For the data I am reading, the path (directory name) carries important information, so it would be useful to be able to access it, ideally as an additional column (e.g. `path_as_column=True`), or at the very least in the collection of delayed objects:

```python
import dask.dataframe as dd

dd.read_csv('s3://bucket_name/*/*/*.csv', collection=True)
```
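To make the requested behavior concrete, here is a minimal stdlib-only sketch of "path as a column": every row read from a matching CSV is tagged with the file it came from. The helper name `read_csvs_with_path` and the `path` column name are hypothetical, it works on local files only (`glob` does not understand `s3://` URLs), and it returns plain dicts rather than a dataframe. Newer dask releases expose an `include_path_column` parameter on `dd.read_csv` for this purpose.

```python
import csv
import glob


def read_csvs_with_path(pattern, path_column="path"):
    """Hypothetical helper: read every CSV matching `pattern` and tag each
    row (a dict) with the path of the file it came from."""
    rows = []
    # sorted() gives a deterministic file order; raw glob order is arbitrary
    for path in sorted(glob.glob(pattern)):
        with open(path, newline="") as f:
            for row in csv.DictReader(f):
                # Note: overwrites any existing column with the same name
                row[path_column] = path
                rows.append(row)
    return rows
```

For example, `read_csvs_with_path('data/*/*/*.csv')` would return rows whose `path` value is something like `data/actual_folder/subfolder/fancyfile.csv`.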

Returning a collection of delayed objects instead (`collection=False`) comes closer:

```python
all_dfs = dd.read_csv('s3://bucket_name/*/*/*.csv', collection=False)

print('key:', all_dfs[0].key)

print('value:', all_dfs[0].compute())
```

but the key is an internal task name, and the path (`s3://bucket_name/actual_folder/subfolder/fancyfile.csv`) doesn't seem to appear anywhere:

key: pandas_read_text-35c2999796309c2c92e6438c0ebcbba4

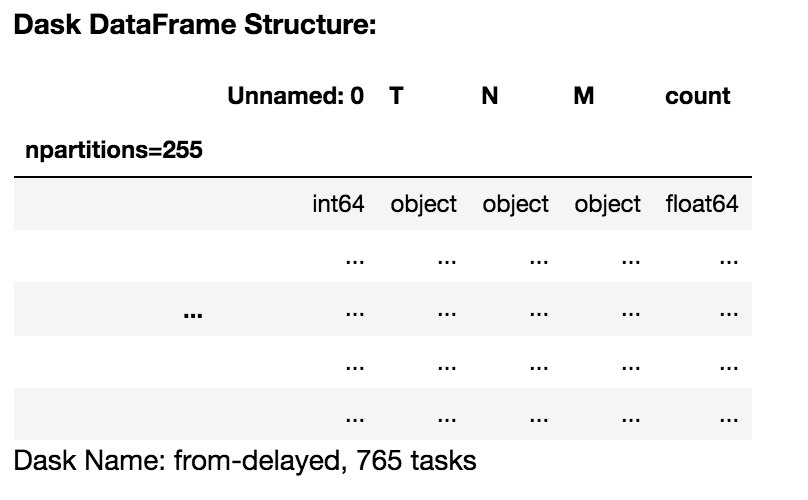

value: Unnamed: 0 T N M count

0 0 T1 N0 M0 0.4454

1 5 T2 N0 M0 0.4076

2 6 T2 N0 M1 0.0666

3 1 T1 N0 M1 0.0612

4 10 T3 N0 M0 0.0054
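As an illustration of the "at the very least in the collection" option, the sketch below keys each file's data by its path instead of by an opaque task name like `pandas_read_text-...`. Each value is a zero-argument loader, so nothing is read until it is called, which loosely mirrors how delayed objects defer work. This is a stdlib-only sketch, not dask's API: the names `lazy_tables_by_path` and `_load` are hypothetical, it handles local files only, and rows come back as lists of dicts rather than pandas DataFrames.

```python
import csv
import functools
import glob


def _load(path):
    # Read one CSV file into a list of row dicts
    with open(path, newline="") as f:
        return list(csv.DictReader(f))


def lazy_tables_by_path(pattern):
    """Hypothetical helper: map each matching file path to a loader.

    No file is opened until its loader is called.
    """
    return {path: functools.partial(_load, path)
            for path in sorted(glob.glob(pattern))}
```

Usage would then look like the original snippet, but with a meaningful key:

```python
for path, load in lazy_tables_by_path('data/*/*/*.csv').items():
    print('key:', path)
    print('value:', load())
```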
