
Bump minrelaytxfee default #6793


To bridge the time until a dynamic method for determining this fee is merged. This is especially aimed at the stable releases (0.10, 0.11) because full mempool limiting, as will be in 0.12, is too invasive and risky to backport.

|

ACK both bumping and value (though I'm not dead committed on a particular value). This is what we release-note recommended on 0.11. |

|

Agreed. |

|

ACK for 0.10 and 0.11. I hope this is not needed for 0.12... |

|

ACK. I think I prefer a lower number (2000 or 2500), but do not have objection to 5000 if that's more popular. |

|

@TheBlueMatt ahh, that was part of my objection to the higher number. we might want higher than 1000 as just a relay fee anyway though.... ugh.. we need some better way than just hardcoding it. |

|

@TheBlueMatt Why would you care about carrying this in git master until the dynamic stuff is merged? Dynamic PR when rebased on this can simply set it back. |

|

I'd hope to get rid of the entire hardcoded value before 0.12 |

|

@TheBlueMatt Right, if you want a different meaning for it in 0.12, probably best to rename it too. But yes, discussion belongs there. @morcos No problem changing it to 2500. |

|

@laanwj Let's stick with 5000. To be slightly pedantic, you could view raising it to 5000 so mempools don't blow up as changing the meaning of it. 1000 is perfectly sufficient as a relay fee for now. Almost every transaction that has ever been transmitted with a fee over 1000 has been mined, so that implies it's almost too HIGH of a fee as a min relay fee. But I like raising it to 5000 and then revisiting in the context of #6722, possibly even lowering it again in backports in the future. |

|

|

Does this mean the change will make every 600 satoshi TxOut (except OP_RETURN) dust? If so, this is a very big change for every colored coin protocol, since every colored coin wallet uses a 600 satoshi output to carry the color (at least OA does). I understand this is not your priority, but the goal of this PR, I think, is to protect against spammy transactions that do not pay enough fees, and it impacts far more than that. :( |

|

@NicolasDorier No one really likes doing this, but as nodes are crashing we have to act in some way, and this is the only knob that can be quickly adjusted that brings down the transaction spam. It is meant to be temporary. Decoupling dust from minRelayTxFee would be possible (what would the other consequences of this be?) but we really want to get a release out soon (due to the upnp vulnerability), so if there is no better solution today, I'm going to merge this one. Edit: decoupling the dust threshold from mintxfee makes no sense as long as dust is defined as "output that is expected to cost more to spend than it transfers", so that would be no easy change; it would need a complete re-definition of what Dust means. |
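For readers following along, the coupling between the dust threshold and minRelayTxFee can be sketched as below. This is a minimal Python sketch, assuming the 0.10/0.11-era policy in which an output is dust when its value is below three times the minimum relay fee for the bytes needed to create and later spend it (serialized output size plus 148 bytes for a typical spending input). The function names and the 34-byte pay-to-pubkey-hash output size are illustrative assumptions, not Bitcoin Core's API.

```python
# Sketch of the dust rule under the assumptions stated above; all names are hypothetical.

SPEND_INPUT_SIZE = 148   # assumed size in bytes of a typical input spending the output
P2PKH_OUTPUT_SIZE = 34   # assumed serialized size in bytes of a pay-to-pubkey-hash output


def relay_fee(fee_per_kb: int, size_bytes: int) -> int:
    """Fee in satoshis for size_bytes at fee_per_kb satoshis per 1000 bytes."""
    fee = fee_per_kb * size_bytes // 1000
    # A non-zero rate never rounds down to a zero fee.
    if fee == 0 and fee_per_kb > 0:
        fee = fee_per_kb
    return fee


def dust_threshold(min_relay_fee_per_kb: int, output_size: int = P2PKH_OUTPUT_SIZE) -> int:
    """Smallest output value in satoshis that is not treated as dust."""
    return 3 * relay_fee(min_relay_fee_per_kb, output_size + SPEND_INPUT_SIZE)


def is_dust(value: int, min_relay_fee_per_kb: int) -> bool:
    """True if an output of this value would be rejected as dust at the given relay fee."""
    return value < dust_threshold(min_relay_fee_per_kb)


if __name__ == "__main__":
    for rate in (1000, 5000):
        print(rate, dust_threshold(rate), is_dust(600, rate))
```

Under these assumptions the threshold moves from 546 satoshi at a relay fee of 1000 satoshi/kB to 2730 satoshi at 5000 satoshi/kB, which is why a 600 satoshi output stops being relayed by nodes running the new default.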

|

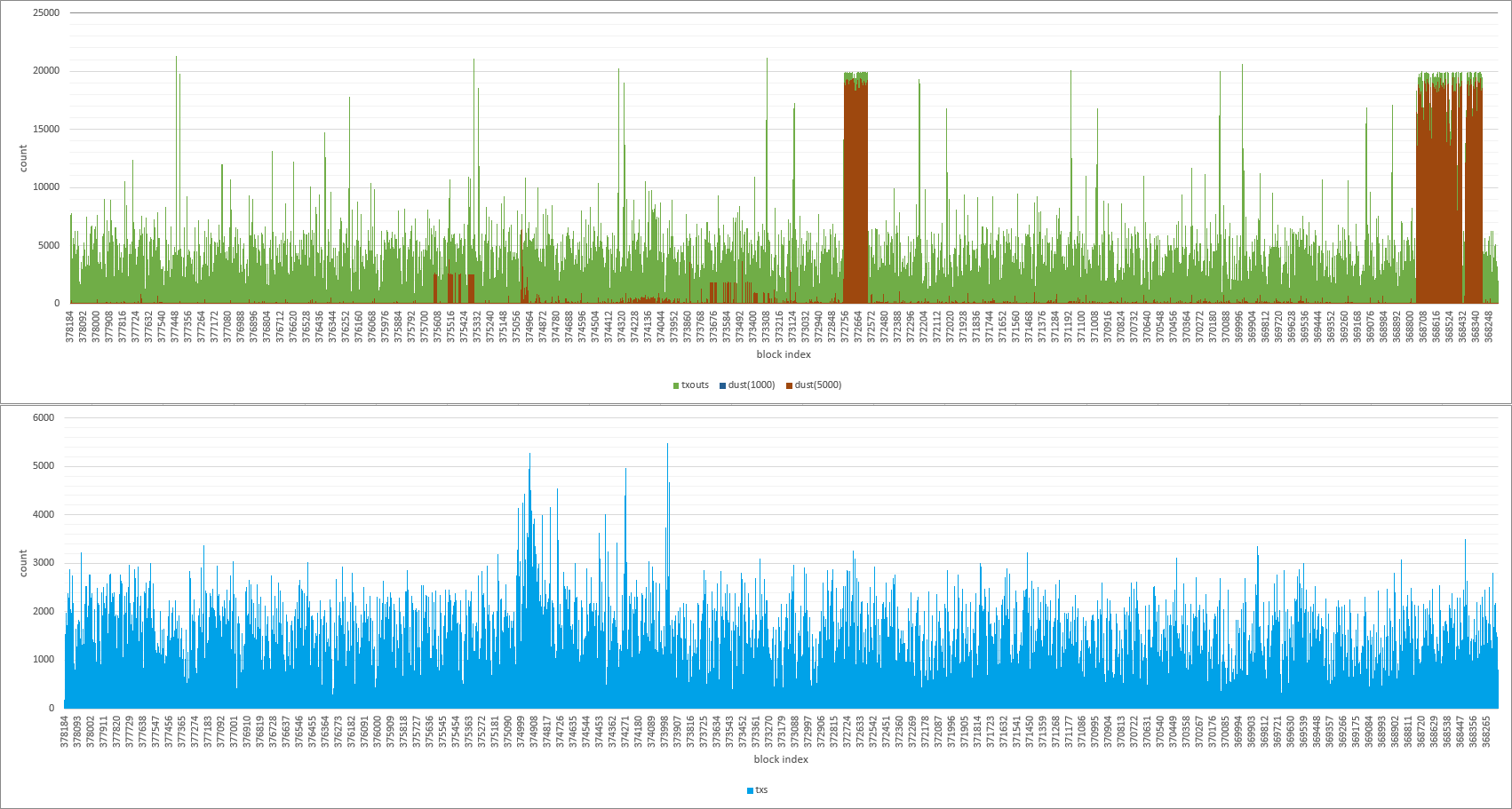

Yes @NicolasDorier, and this also affects other meta-layer protocols such as Counterparty, Omni, ChanceCoin, NewbieCoin, .... However, I believe this change is mostly intended to avoid mempool bloat, and as long as there are still a few nodes (and miners!) accepting the transactions, it hopefully has no "blackout" effect. I acknowledge the need for a solution like this, at least temporarily, but I was kinda missing a justification (i.e. it's not really mentioned, why 5000 is better than 1000, or whether this actually solves something), so I checked the impact of changing the It's not a great chart, but it shows that there were massive spikes of outputs, which would have been rejected, so even if I don't really like it either, it seems to work. Haven't checked, whether this matches the time of "attacks" though. |

|

It works because it makes spamming your mempool full 5 times as expensive. This answers the "why is it better than 1000" part. In practice quite a few people have been using this value and it has apparently kept their mempool sizes from getting out of hand. It may be too high though, more than is needed to prevent the current floods; as said, I'm not wedded to the specific value. Again, if you have a better solution quickly, that may be preferable to this one. If not, I'm going to merge this. |
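The "5 times as expensive" point is plain arithmetic. A minimal sketch, assuming an attacker pays exactly the minimum relay fee per kilobyte and using a hypothetical 100 MB of spam as the target size (the function name and the figure are illustrative, not taken from the discussion):

```python
# Illustrative arithmetic only; the mempool size is a made-up example figure.

SATOSHI_PER_BTC = 100_000_000


def cost_to_fill_satoshis(mempool_bytes: int, fee_per_kb: int) -> int:
    """Minimum total fee to fill mempool_bytes with transactions paying
    exactly fee_per_kb satoshis per 1000 bytes."""
    return mempool_bytes * fee_per_kb // 1000


if __name__ == "__main__":
    mempool_bytes = 100_000_000  # hypothetical 100 MB of spam transactions
    old_cost = cost_to_fill_satoshis(mempool_bytes, 1000)
    new_cost = cost_to_fill_satoshis(mempool_bytes, 5000)
    print(old_cost / SATOSHI_PER_BTC, new_cost / SATOSHI_PER_BTC, new_cost // old_cost)
```

With these numbers the cost rises from 1 BTC to 5 BTC. The ratio is independent of the mempool size chosen, since the cost is linear in the fee rate.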

Given that these protocols use very-close-to-dust values, from that perspective it probably doesn't matter, whether it's 1500 or 5000, so 5000 seems to be as good (or bad) as any other value above the old default.

Unfortunately I don't, but as mentioned, I acknowledge the need for a temporary solution, so no objections from my side. |

|

Just in case, if anyone is wondering, what the new dust thresholds with

|

|

I just put out PR #6803 if anyone wants to take a look.

|

|

@laanwj I understand the need for this. What I am saying is that every time you change dust, it requires an enormous amount of change/build/update work for all meta protocols. This is worse for us than a hard fork, because for a hard fork you only need to update the node. One alternative is to hardcode the "Dust" limit to what it was before; I think you are raising this limit temporarily anyway. The worst part is that it is temporary! Which means that we will need to go through the pain again soon. If it is temporary, can't you temporarily make Dust a hardcoded value as well? The dust limit also does not change much for spamming, because you can just send those 600 satoshi to yourself, so it does not cost you anything. |

|

Prefer 0.0001 BTC for the value, but utACK. @NicolasDorier This is just node policy. Nothing should ever be hard-coded to assume anything. I'm also unaware of any BIP (even draft) that does. For both of these reasons individually, there is no reason to consider it a concern. |

|

@luke-jr, let me know a solution for fixing all those protocols that do not care about nValue, so that we don't get hit as collateral damage by the nuke next time. |

|

@NicolasDorier What protocols? Like I said, I am not aware of any BIP draft that cares about this. |

|

Counterparty, Omni, ChanceCoin, NewbieCoin, OpenAsset, EPOBC, Colu, in fact anything that does not care about "nValue". |

|

Where are the BIP drafts for those? If they don't have one, I assume they're pre-alpha and can change on a dime drop. If they don't care about nValue, this change shouldn't affect them anyway. |

|

It is. They use 600 satoshi for every TxOut. When v0.12 comes out they'll need to go through the whole rebuild/redeploy process, which is way more painful than a hardfork. Well, I don't think I'll make you change your mind anyway; we'll take the blow, not knowing when it will happen again. |

|

I personally have no complaints about 5000 or even 1000 satoshi. However, I think this is a band-aid and does not address the real problem, which is that mempools will keep getting filled by tx using the lowest allowed fees. The right solution for this is to have near-dust transactions as "Fill or Kill". That is, if a transaction is not confirmed within n blocks (say 5), it is dropped from the mempool (for non-high priority). This will require the sender to re-send. The number of blocks can be a function of the fee. i.e. 1 satoshi = 1 block, 10 satoshi = 2 blocks, 100 satoshi = 3 blocks, 1000 satoshi = 4 blocks. 4000 satoshi can be 1 day. Anything over 4000 can be treated 'business as usual' |
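The fee-to-lifetime schedule proposed in this comment can be sketched as a simple lookup. This is a minimal Python sketch of the idea only, not an existing Bitcoin Core mechanism; the names are hypothetical, "1 day" is taken as 144 blocks (assuming one block roughly every 10 minutes), and a fee between two listed steps is assumed to use the lower step.

```python
# Sketch of the "Fill or Kill" schedule from the comment; names and gap-filling rules are assumptions.
from bisect import bisect_right
from typing import Optional

BLOCKS_PER_DAY = 144  # assumption: one block roughly every 10 minutes

# (minimum fee in satoshis, blocks before an unconfirmed transaction is dropped)
EXPIRY_SCHEDULE = [(1, 1), (10, 2), (100, 3), (1000, 4), (4000, BLOCKS_PER_DAY)]
THRESHOLDS = [fee for fee, _ in EXPIRY_SCHEDULE]


def expiry_blocks(fee: int) -> Optional[int]:
    """Blocks a transaction may wait before eviction; None means no expiry."""
    if fee > 4000:
        return None  # "business as usual"
    idx = bisect_right(THRESHOLDS, fee) - 1
    if idx < 0:
        return 1  # assumption: fees below 1 satoshi get the shortest window
    return EXPIRY_SCHEDULE[idx][1]


def should_drop(fee: int, blocks_waiting: int) -> bool:
    """True if a transaction with this fee has waited past its window."""
    limit = expiry_blocks(fee)
    return limit is not None and blocks_waiting >= limit


if __name__ == "__main__":
    for fee in (1, 50, 1000, 4000, 5000):
        print(fee, expiry_blocks(fee))
```

Whether high-priority transactions are exempt, as the comment suggests, is left out of the sketch.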

|

Changing network relay rules suddenly is a drastic measure (although not necessarily unwarranted in drastic times). Many wallets will be forced to rush out updates, and even more users will find their software broken until they update. Getting 100% of users to update or reconfigure their software is a long process. Thankfully we have an API hook in place for breadwallet to bump fees remotely (within a hard coded range limit for security). |

|

@voisine There are no relay rules, just relay policy, which has always been decided on a per-node basis by the node operator and should never be relied on to be consistent. |

|

but as a wallet operator if you offer users the option to choose a (calculated) 'low priority' fee then knowing that a big part of the network runs a certain default means you'll get it relayed. 0.00005 will be higher than utACK but I also agree that hardcoding dust or otherwise documenting the reasoning behind it would be very helpful for the meta protocols |

|

@luke-jr, indeed, it's up to each node operator to decide if they want to follow standard policy and help, or to diverge and hinder the functioning of the p2p gossip network. For the network to be useful for wallets, nodes need to follow a predictable relay policy that wallets can adhere to. Otherwise they'll need to find some alternate method of getting transactions to miners. |

|

This will break many wallets that do not fetch fee data from a central server, as BreadWallet does. |

|

Even if we raise the fee, with enough volume, mempools will be full of Tx that are not confirmed in a block. These transactions will languish in mempools indefinitely and the bitcoins in them are in a state of limbo for days. The solution can't be to keep raising the fee until we are more expensive than paypal. Bitcoins hanging in mempools for indefinite duration is bad for meta-protocols, micro-payments, and possibly hundreds of innovations in progress right now. Fill or Kill is a solution from the fiat world that deterministically releases these coins and allows the original sender to retry with a larger fee. This releases us from having to hard-code a fee limit and keep changing it with any periodic fluctuations in tx volume and value of bitcoin. We should also consider that the class of solutions encompassing simply changing a min fee, dust-threshold, etc. are band-aids. We need to acknowledge that the current implementation is not addressing a given use case and find a solution for it. |

|

Does the relay penalty on transactions containing a low value ("dust") output apply to transactions that pay a minimum or better network fee? |

Yes. A node, which considers an output as dust, won't relay the transaction, even with high transaction fees. |

Thanks for the info. Doesn't that seem a little overbearing? If a transaction is paying sufficient network fee, it seems to me it should be exempt from dust spam filtering, because the network fee puts a real cost on spammy transactions. |

|

@dthorpe Just because it has a real cost doesn't mean it should be relayed or mined. Also remember the standard fees today do not nearly cover the cost of transactions, nor are they paid to the people affected by them. |

|

@dthorpe: actually it's pretty interesting in my opinion. The general idea behind "dust" is that no output should be "uneconomic", i.e.: it should not cost more to spend an output than the output is worth (if this is really satisfied on a larger scale is a different question, but anyway..). Consider for example a transaction with high fee, but outputs with only 1 satoshi. This would be fine, if the transaction fees were zero, but otherwise probably no one would be inclined to spend the 1 satoshi outputs (which would ultimately lead to utxo bloat). |

|

@dexX7 So the main rationale for dust is only to prevent UTXO bloat? Saying that dust is uneconomic to spend is untrue with colored coin protocols. It seems there is massive complexity in preparation for a floating minRelayTxFee, when really I doubt it gives any advantage, except against a potential UTXO bloat. But UTXO size is negligible compared to the blocks. But let's admit: "Saying that dust is uneconomic to spend is untrue with colored coin protocols." is true. If that is the case, dust should not be bound to minRelayTxFee but to the current fee rate, whatever it is at the moment of broadcast. This would simplify the code a bit, would be consistent with the goal of not having "uneconomic outputs", and would spare us yet another magic default which can have some dramatic impact. (Yes, I know people should not rely on the default in theory, but they do in practice.) As I said, this rushed decision has more impact than a hardfork for wallets. If you have a coin of X BTC and the user wants to send (X - 1000 satoshi) BTC, then before your modification the change of 1000 satoshi would be sent back to the user, but after your modification the change would prevent the transaction from spreading. So it requires everyone to rebuild and redeploy for all of our users. The interesting thing to note is that it is impossible in theory to know if an output will be more costly than its value to spend, because the price to spend depends on the fee rate at the time of spending, which can't be predicted. But well, I guess it is not a better approximation to use the fee rate instead of minRelayTxFee for that. |
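The wallet impact described here (a 1000 satoshi change output that used to relay and no longer does) can be illustrated with a small change-handling sketch. This is a hypothetical helper, not code from any wallet mentioned in the thread. The two thresholds assume the pay-to-pubkey-hash dust limit is 546 satoshi at a relay fee of 1000 satoshi/kB and 2730 satoshi at 5000 satoshi/kB, and folding sub-threshold change into the fee is just one possible way for a wallet to cope.

```python
# Hypothetical wallet helper; thresholds are assumed values for P2PKH outputs.
from typing import Tuple

OLD_DUST_THRESHOLD = 546    # assumed dust limit at minrelaytxfee = 1000 satoshi/kB
NEW_DUST_THRESHOLD = 2730   # assumed dust limit at minrelaytxfee = 5000 satoshi/kB


def plan_change(coin_value: int, send_value: int, fee: int,
                dust_threshold: int) -> Tuple[int, int]:
    """Return (change_output_value, effective_fee) in satoshis.

    Change below the dust threshold is not emitted as an output;
    it is added to the fee so the transaction stays relayable.
    """
    change = coin_value - send_value - fee
    if change < 0:
        raise ValueError("insufficient funds")
    if change < dust_threshold:
        return 0, fee + change
    return change, fee


if __name__ == "__main__":
    # A coin of 100000 satoshi, sending 98000 with a 1000 satoshi fee leaves 1000 of change.
    print(plan_change(100_000, 98_000, 1_000, OLD_DUST_THRESHOLD))
    print(plan_change(100_000, 98_000, 1_000, NEW_DUST_THRESHOLD))
```

A wallet that hardcodes the old threshold would still emit the 1000 satoshi change output, and nodes running the new default would refuse to relay the transaction, which is the redeploy problem raised above.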

True, there are always other factors in play. I was referring to the network fee as an unrecoverable real cost as a spam deterrent.

I'm well aware of that. That seems like a sustainability problem, no? My understanding is that network fees exist primarily to provide tx prioritization and spam deterrence. The economic model of my business-built-on-the-Bitcoin-blockchain (which uses OpenAsset colored coins, btw) is prepared to pay higher fees to support the network. I can only hope that such fees will eventually go towards supporting the network as a whole (validating full nodes) rather than just the miners. |

That's my understanding, yes, and to some degree that coins are not "lost" (e.g. consider what might happen, if there were millions of uneconomic outputs, hehe). There was quite a bit of discussion, which may be interesting to read: #2351, #2577 (also discusses colored coins)

Sorry, can you please clarify? Mined outputs, or blocks, can be pruned, while you can't just remove entries from the UTXO set, which makes UTXO space more "expensive" than block space.

I agree, but I'm not sure if this could be generalized. It's an exceptional case.

I see where you're going: since the economic value of an output, when spending, is affected by the transaction fees, this should be the basis to determine the economic value. As such, this sounds reasonable to me. It probably doesn't make sense, if the thresholds were based on the fees of the sending transaction (e.g. think about a transaction with very high fee for fast confirmation - how does this relate to the spender at all?), but instead based on the context when spending (e.g. mempool size etc.). It seems to overlap, once mempool space becomes limited based on transaction fees though.

You may assume certain defaults (or widely used values), and leverage that, but relying on it doesn't seem solid, and breaks once the values change, either locally, say when a single node has a different policy, or more globally, if default values change and a large part of the network uses the new default. Since you're mostly concerned about OA, I'm actually wondering how this is an issue for you, which is Let's say there were a network-wide default static transaction fee of 0.00001 BTC, and you built a wallet which always sends transactions with a 0.00001 BTC transaction fee. At some point the default fee is raised to a static value of 0.00005 BTC. Or let's say, at some point the default fee is no longer static, but instead floating. Wouldn't you agree that a wallet that assumes transaction fees of 0.00001 BTC should be overhauled at this point? Your situation is similar in my opinion. |

I agree on this point, but dynamic fees are something people had time to adapt to (even faster thanks to the spam attacks). Say you want to send 1000 satoshi to Alice, but have a coin of 2100 satoshi. A wallet with a MinTxRelayFee of 1000 will send the 1100 satoshi of change. So my points are the following:

The fee rate is a widely available value nowadays contrary to MinTxRelayFee, and also has been largely tested.

I was talking about non-pruned node, where the UTXO can't be bigger than the block directory anyway. For pruned one, this is a whole other discussion, but briefly with pruned node you can't download blocks or merkle blocks from them (the service bit Network is not set).

If I understand what you mean, you are saying it makes sense to block the spending of an uneconomical output. If that is so, I don't agree, because spending an output always reduces UTXO size and should never be denied. (Also, you don't want users not knowing whether they will be able to spend their coins in the future.) What I meant is really doing just like now: blocking at the creation of an uneconomic output, except based on the fee of the transaction instead of MinTxRelayFee. So we don't need external state to know what the dust is, and the dust value evolves nicely with how much it would cost to spend the output. (You can't really know how much it will cost to spend in the future, but at least you have the best guess you can get by using the fee of the transaction.) It makes sense to use the transaction fee instead of the fee rate, because the decision whether the transaction was accepted or not is primarily based on the fee rate.

I will check that. |
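The alternative floated in this comment, deciding dust from the creating transaction's own fee instead of MinTxRelayFee, might look roughly like the sketch below. It is a hypothetical illustration of the proposal, not existing node policy: the function names, the 148-byte spending-input size, and the choice to compare the output value directly against the estimated cost to spend (with no safety multiplier) are all assumptions.

```python
# Hypothetical sketch of a fee-relative dust check; not Bitcoin Core behavior.

SPEND_INPUT_SIZE = 148  # assumed size in bytes of a typical input spending the output


def fee_rate_per_kb(tx_fee: int, tx_size: int) -> int:
    """Fee rate of the creating transaction in satoshis per 1000 bytes."""
    if tx_size <= 0:
        raise ValueError("tx_size must be positive")
    return tx_fee * 1000 // tx_size


def is_dust_by_tx_fee(output_value: int, output_size: int,
                      tx_fee: int, tx_size: int) -> bool:
    """True if the output is worth less than the estimated cost of spending it
    at the fee rate paid by the transaction that creates it."""
    rate = fee_rate_per_kb(tx_fee, tx_size)
    cost_to_spend = rate * (output_size + SPEND_INPUT_SIZE) // 1000
    return output_value < cost_to_spend


if __name__ == "__main__":
    # A 250-byte transaction carrying a 600 satoshi, 34-byte output.
    print(is_dust_by_tx_fee(600, 34, tx_fee=250, tx_size=250))
    print(is_dust_by_tx_fee(600, 34, tx_fee=2500, tx_size=250))
```

In this sketch the same 600 satoshi output passes when the transaction pays 1000 satoshi/kB and fails when it pays 10000 satoshi/kB, which makes concrete the objection raised earlier in the thread that a sender paying a high fee for fast confirmation says little about what spending the output will later cost.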

|

The dust rule is about creating uneconomical outputs, not about spending |

|

In the long term it is unmaintainable that every node has every block Being able to independently verify that nobody in the network is cheating Note that it is not just about storage. To validate blocks quickly, you So, no, keeping the UTXO set small is absolutely in the best interest of |

Sorry, no, this was probably a bit unclear: spending should not be blocked, but to determine whether an output is uneconomic or not, the context when spending the output comes into play. It's given that only a) historical data can be used, and b) the data that is available when sending. You mentioned that the threshold may solely be based on transaction fees of the sending transaction (which tackles b, but not a), and my point basically was that this seems like too limited a perspective, which is not necessarily related to the actual economic value at all.

Are you referring to the bump of the PR? I'm not sure, if this will turn out to be effective, and it doesn't seem like a good longer term solution, but it may make spamming more difficult, and the higher threshold would have buffered the larger spikes (see #6793 (comment)). |

|

Ok, thanks sipa, I see where pruning is going now; it convinces me that dust is useful. I was reflecting on the current state, which is black or white. @dexX7 You are already indirectly using historical data with b) if you use only the transaction fee as the basis for the dust definition, because nowadays the transaction fee already takes historical data into account. I opened #6824 to prevent hijacking the PR discussion. |

Bump minrelaytxfee default to bridge the time until a dynamic method for determining this fee is merged.

This is especially aimed at the stable releases (0.10, 0.11) because full mempool limiting, as will be in 0.12, is too invasive and risky to backport.

The specific value (currently 0.00005) is open for discussion.

Ping @gmaxwell @morcos

Context: https://github.com/bitcoin/bitcoin/blob/v0.11.0/doc/release-notes.md#transaction-flooding