
Description

Apache Airflow version

2.2.1

What happened

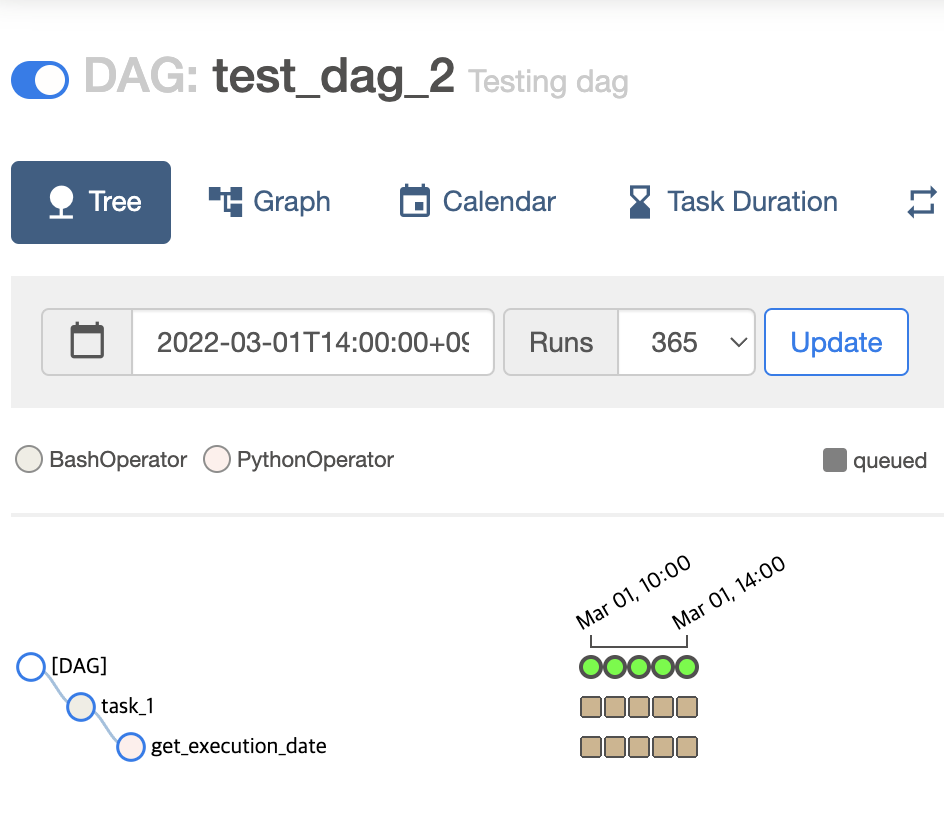

When I try to backfill using `airflow dags backfill ...`, the DAG run shows as running, but its tasks stay in the scheduled state indefinitely.

- Code for DAG to reproduce the problem

    import time
    from datetime import timedelta

    import pendulum
    from airflow import DAG
    from airflow.decorators import task
    from airflow.models.dag import dag
    from airflow.operators.bash import BashOperator
    from airflow.operators.dummy import DummyOperator
    from airflow.operators.python import PythonOperator

    default_args = {
        'owner': 'airflow',
        'depends_on_past': False,
        'email': ['[email protected]'],
        'email_on_failure': True,
        'email_on_retry': False,
        'retries': 2,
        'retry_delay': timedelta(minutes=5),
    }


    def get_execution_date(**kwargs):
        ds = kwargs['ds']
        print(ds)


    with DAG(
        'test_dag_2',
        default_args=default_args,
        description='Testing dag',
        start_date=pendulum.datetime(2022, 4, 2, tz='UTC'),
        schedule_interval="@hourly",
        max_active_runs=5,
        concurrency=10,
    ) as dag:
        t1 = BashOperator(
            task_id='task_1',
            depends_on_past=False,
            bash_command='sleep 5'
        )

        t2 = PythonOperator(
            task_id='get_execution_date',
            python_callable=get_execution_date
        )

        t1 >> t2

- Airflow backfill CLI

    $ airflow dags backfill --subdir /opt/airflow/dags/repo --reset-dagruns -s "2022-03-01 01:00:00" -e "2022-03-02 01:00:00" test_dag_2

- Result

- Discussion

The 'Run' shows running but the tasks are stuck in scheduled state forever.
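For reference, here is a rough sketch of how many hourly runs the backfill command above should create. It assumes both the `-s` and `-e` timestamps are inclusive and simply steps one hour at a time; the helper name `hourly_logical_dates` is made up for illustration and is not Airflow's own timetable logic.

```python
from datetime import datetime, timedelta
from typing import List


def hourly_logical_dates(start: datetime, end: datetime) -> List[datetime]:
    """Return every hourly timestamp from start to end, both inclusive (assumption)."""
    dates = []
    current = start
    while current <= end:
        dates.append(current)
        current += timedelta(hours=1)
    return dates


# Same range as the -s / -e arguments of the backfill command.
start = datetime(2022, 3, 1, 1, 0, 0)
end = datetime(2022, 3, 2, 1, 0, 0)
runs = hourly_logical_dates(start, end)
print(len(runs))  # 25
```

Under that assumption the range yields 25 runs. This matches the "finished run 0 of 25" figure in the scheduler log, although the run_id there points at a different date, so the log may come from a separate invocation.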

Here are the variations I have tried. The issue persists with every one of them.

- Changing max_active_runs and concurrency

- Using the -x option of the backfill command

- Using the --subdir option of the backfill command

- Using the --reset-dagruns option of the backfill command

- Changing schedule_interval to None and @once

- Restarting the Airflow scheduler pods

- Upgrading Airflow to 2.3.0

- Related issue

Task stuck in "scheduled" or "queued" state, pool has all slots queued, nothing is executing #13542

What you think should happen instead

The backfill runs for the past dates should complete successfully.

How to reproduce

No response

Operating System

CentOS Linux release 7.9.2009 (Core)

Versions of Apache Airflow Providers

apache-airflow-providers-amazon 3.3.0

apache-airflow-providers-celery 2.1.0

apache-airflow-providers-cncf-kubernetes 3.0.2

apache-airflow-providers-docker 2.6.0

apache-airflow-providers-elasticsearch 3.0.3

apache-airflow-providers-ftp 2.1.2

apache-airflow-providers-grpc 2.0.4

apache-airflow-providers-hashicorp 2.2.0

apache-airflow-providers-http 2.0.2

apache-airflow-providers-imap 2.2.3

apache-airflow-providers-postgres 4.1.0

apache-airflow-providers-redis 2.0.4

apache-airflow-providers-sendgrid 2.0.4

apache-airflow-providers-sftp 2.6.0

apache-airflow-providers-slack 4.2.3

apache-airflow-providers-sqlite 2.1.3

apache-airflow-providers-ssh 2.4.3

Deployment

Official Apache Airflow Helm Chart

Deployment details

No response

Anything else

Here are some logs from the scheduler pod. They show kubernetes_executor finishing and deleting the worker pod.
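The `[backfill progress]` line in the scheduler log packs several counters into one string. The following is a minimal sketch of a parser for it, assuming the line keeps the format shown in this report. The function name `parse_backfill_progress` is hypothetical and is not part of Airflow.

```python
import re


def parse_backfill_progress(line: str) -> dict:
    """Extract the integer counters from a '[backfill progress]' log line."""
    # Keep only the text after the marker; empty if the marker is absent.
    _, _, summary = line.partition("[backfill progress]")
    counters = {}
    for field in summary.split("|"):
        # Fields look like "tasks waiting: 5" or "running: 5".
        match = re.fullmatch(r"\s*([a-z ]+):\s*(\d+)\s*", field)
        if match:
            counters[match.group(1).strip()] = int(match.group(2))
    # The run counter uses a different shape: "finished run 0 of 25".
    run = re.search(r"finished run (\d+) of (\d+)", summary)
    if run:
        counters["finished runs"] = int(run.group(1))
        counters["total runs"] = int(run.group(2))
    return counters


sample = (
    "[2022-05-13 02:48:08,497] {backfill_job.py:397} INFO - [backfill progress] "
    "| finished run 0 of 25 | tasks waiting: 5 | succeeded: 0 | running: 5"
    "| failed: 0 | skipped: 0 | deadlocked: 0 | not ready: 5"
)
counters = parse_backfill_progress(sample)
print(counters["running"], counters["succeeded"])
```

For the sample line, the backfill job counts 5 tasks as running and 0 as succeeded, even though the executor events around it report the pod as Succeeded.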

[2022-05-13 02:48:08,417] {kubernetes_executor.py:147} INFO - Event: testdag2task1.34ae944f04ee4a18a65f559163ca0d1a had an event of type MODIFIED

[2022-05-13 02:48:08,417] {kubernetes_executor.py:206} INFO - Event: testdag2task1.34ae944f04ee4a18a65f559163ca0d1a Succeeded

[2022-05-13 02:48:08,423] {kubernetes_executor.py:147} INFO - Event: testdag2task1.34ae944f04ee4a18a65f559163ca0d1a had an event of type DELETED

[2022-05-13 02:48:08,423] {kubernetes_executor.py:206} INFO - Event: testdag2task1.34ae944f04ee4a18a65f559163ca0d1a Succeeded

[2022-05-13 02:48:08,497] {backfill_job.py:397} INFO - [backfill progress] | finished run 0 of 25 | tasks waiting: 5 | succeeded: 0 | running: 5| failed: 0 | skipped: 0 | deadlocked: 0 | not ready: 5

[2022-05-13 02:48:13,390] {kubernetes_executor.py:375} INFO - Attempting to finish pod; pod_id: testdag2task1.34ae944f04ee4a18a65f559163ca0d1a; state: None; annotations: {'dag_id': 'test_dag_2', 'task_id': 'task_1', 'execution_date': None, 'run_id': 'backfill__2022-03-03T02:00:00+00:00', 'try_number': '1'}

[2022-05-13 02:48:13,391] {kubernetes_executor.py:375} INFO - Attempting to finish pod; pod_id: testdag2task1.34ae944f04ee4a18a65f559163ca0d1a; state: None; annotations: {'dag_id': 'test_dag_2', 'task_id': 'task_1', 'execution_date': None, 'run_id': 'backfill__2022-03-03T02:00:00+00:00', 'try_number': '1'}

[2022-05-13 02:48:13,392] {kubernetes_executor.py:576} INFO - Changing state of (TaskInstanceKey(dag_id='test_dag_2', task_id='task_1', run_id='backfill__2022-03-03T02:00:00+00:00', try_number=1), None, 'testdag2task1.34ae944f04ee4a18a65f559163ca0d1a', 'jutopia-chlee-test-chlee-backfill-test', '3559062271') to None

[2022-05-13 02:48:13,396] {kubernetes_executor.py:661} INFO - Deleted pod: TaskInstanceKey(dag_id='test_dag_2', task_id='task_1', run_id='backfill__2022-03-03T02:00:00+00:00', try_number=1) in namespace jutopia-chlee-test-chlee-backfill-test

Are you willing to submit PR?

- Yes I am willing to submit a PR!

Code of Conduct

- I agree to follow this project's Code of Conduct