Topic: In this post, I break down the standards shaping the field, the tradeoffs in different architectural choices, and some best practices I’ve learned along the way.

Core Questions:

- What are the standards and schemas used in security data modeling today?

- How do different architectural approaches compare in strengths and weaknesses?

- What best practices can help avoid common pitfalls and ensure scalability?

Let me start off by saying all data modeling standards are crap. Literally all of them are wrong. The only question is whether a given standard is wrong in a way you can live with.

Think about it. They are slow to adapt, full of compromises, and often shaped more by vendor politics than real-world operational needs. Every schema leaves gaps, forces awkward fits, or overcomplicates simple problems. By the time a standard gains adoption, the threat landscape and technology stack have already changed.

My advice? Treat them as a baseline, not gospel, and be ready to bend or break the rules when they get in the way of solving the real problem.

That is exactly why I put together this post. Below, I break down the most widely used standards in security data modeling, what they do well, where they fall short, and how to choose (and adapt) an approach that fits your team’s needs. We will also look at the major architectural decisions every organization faces, along with practical recommendations for building models that can survive contact with the real world.

Common Standards

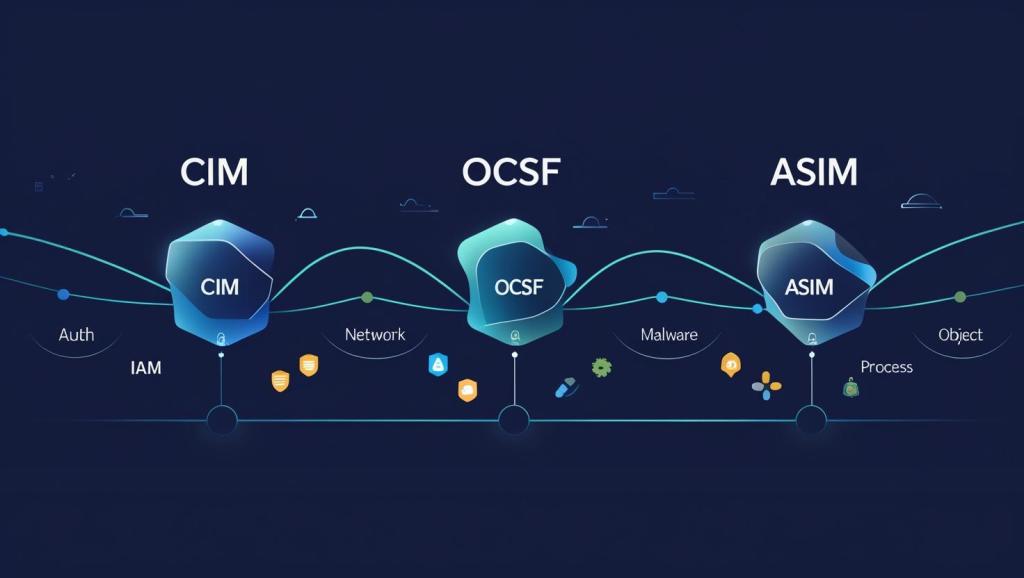

First, some standards. Best practices in security data modeling have crystallized around several key frameworks and methodologies that address the unique challenges of security data. I’ve listed the three big ones below along with their strengths, weaknesses, etc.

No standard is perfect. The right choice depends on your existing tooling, the diversity of your data sources, and how much you value vendor neutrality versus ecosystem depth. Treat these models as starting points, not final answers. Pick the one that fits most of your needs, adapt it where it falls short, and make sure your architecture can evolve if your choice stops working for you.
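To make "adapt it where it falls short" concrete, here is a minimal sketch of normalizing a vendor event into a common schema while keeping the fields the schema has no home for. The field names, the `FIELD_MAP` table, and the `unmapped` bucket are all hypothetical and are not taken from any real standard; treat it as an illustration of the pattern, not a mapping you can use as-is.

```python
from typing import Any

# Hypothetical mapping from one vendor's field names to a team-chosen
# normalized schema. These names are illustrative, not from a real standard.
FIELD_MAP = {
    "src": "src_ip",
    "dst": "dst_ip",
    "usr": "user_name",
    "ts": "event_time",
}


def normalize(raw: dict[str, Any], field_map: dict[str, str] = FIELD_MAP) -> dict[str, Any]:
    """Rename known fields and keep everything else under 'unmapped'."""
    normalized: dict[str, Any] = {}
    unmapped: dict[str, Any] = {}
    for key, value in raw.items():
        if key in field_map:
            normalized[field_map[key]] = value
        else:
            # The schema has no slot for this field, so preserve it
            # instead of silently dropping it.
            unmapped[key] = value
    if unmapped:
        normalized["unmapped"] = unmapped
    return normalized


raw_event = {
    "src": "10.0.0.5",
    "usr": "alice",
    "ts": "2024-01-01T00:00:00Z",
    "vendor_risk": 87,  # vendor-specific field with no normalized equivalent
}
event = normalize(raw_event)
```

Keeping unmapped fields alongside the normalized ones means a gap in the standard costs you a slightly messier record rather than lost data, and it gives you a list of candidates for your own schema extensions.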

Architectural Choices

Standards give you a common language for your data, but they do not tell you how to store it, organize it, or move it through your systems. That is where architecture comes in. Even if you pick the “right” schema, the wrong architectural decisions can leave you with a brittle, slow, or siloed system.

In practice, the choice of a standard and the choice of architecture are linked. A vendor-centric model like Splunk’s Common Information Model (CIM) might push you toward certain toolchains. A vendor-neutral schema like the Open Cybersecurity Schema Framework (OCSF) can give you more freedom to design a hybrid architecture. Microsoft’s Advanced Security Information Model (ASIM) might make sense if your governance model already leans heavily on Microsoft tools.

No matter what standard you start with, you still have to navigate the big tradeoffs that define how your security data platform works in the real world. Below are five key architectural decisions that have the biggest impact on scalability, performance, and adaptability.

- Relational vs. Graph

Relational databases are reliable, mature, and great for structured queries and compliance reporting. They struggle, though, with the many-to-many relationships common in security data, which often require expensive joins. Graph databases handle those relationships naturally and make things like attack path analysis far more efficient, but they require specialized skills and are not as strong for aggregation-heavy workloads.

- Time-series vs. Event-Based

Time-series models are great for continuous measurements like authentication rates or network metrics, with built-in aggregation and downsampling. Event-based models capture irregular, discrete events with richer context, making them better for forensic reconstruction. Many teams now run hybrids with time-series for baselining and metrics and event-based for detailed investigation.

- Centralized vs. Federated Governance

Centralized governance gives you consistent policy enforcement and unified visibility, which is great for compliance, but it can become a bottleneck. Federated governance lets teams move faster and tailor models to their needs, but risks fragmentation. Large organizations often mix the two: local autonomy for operations, centralized oversight for security and compliance.

- Performance vs. Flexibility

If you need fast queries for SOC dashboards, you will lean toward pre-aggregated, columnar storage. If you want to explore new detections and threat hypotheses, you will want schema-on-read flexibility, even if it costs more compute time. Many mature teams adopt a Lambda-style approach that keeps both real-time and batch capabilities.

- Storage Efficiency vs. Query Performance

Compressed formats and tiered storage save money but slow down complex queries. In-memory databases and materialized views make investigations fast but cost more. The right balance depends on your use case: compliance archives need efficiency, while real-time threat detection needs speed.
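To show why the relational vs. graph choice matters for attack path analysis, here is a small sketch of a path query over "can reach" relationships. In a relational model each hop would typically be another self-join on an edge table; a graph traversal just follows edges until it hits the target. The host names and edges are made up for illustration, and this is a plain in-memory breadth-first search, not the API of any particular graph database.

```python
from collections import deque

# Hypothetical "an attacker on A can reach B" relationships.
EDGES = [
    ("workstation-1", "file-server"),
    ("workstation-1", "jump-host"),
    ("jump-host", "domain-controller"),
    ("file-server", "backup-server"),
]


def build_adjacency(edges):
    """Turn a list of (source, target) pairs into an adjacency map."""
    adjacency = {}
    for source, target in edges:
        adjacency.setdefault(source, []).append(target)
    return adjacency


def shortest_attack_path(adjacency, start, goal):
    """Breadth-first search; returns the shortest path as a list, or None."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for neighbor in adjacency.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None


graph = build_adjacency(EDGES)
path = shortest_attack_path(graph, "workstation-1", "domain-controller")
# path is ["workstation-1", "jump-host", "domain-controller"]
```

The traversal does not need to know in advance how many hops the path has, which is the part that gets awkward with fixed-depth joins.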

Your choice of standard sets the language for your data, but these architectural decisions determine how that data actually works for you. The most resilient security data platforms come from matching the two: picking a model that fits your environment, then making architecture choices that balance speed, flexibility, governance, and cost. That is why the final step is not chasing the “perfect” setup, but designing for scale, interoperability, and adaptability from the start.
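As one illustration of the hybrid time-series and event-based pattern described earlier, the sketch below downsamples raw authentication events into per-minute failure counts for baselining, while keeping the original events around for drill-down. The event shape, field names, and helper functions are hypothetical; a real pipeline would do this in a stream processor or database rather than in a Python list.

```python
from collections import Counter
from datetime import datetime

# Hypothetical authentication events with full context (the event-based view).
EVENTS = [
    {"time": "2024-01-01T10:00:05", "user": "alice", "outcome": "failure"},
    {"time": "2024-01-01T10:00:40", "user": "alice", "outcome": "failure"},
    {"time": "2024-01-01T10:01:10", "user": "bob", "outcome": "success"},
    {"time": "2024-01-01T10:01:30", "user": "alice", "outcome": "success"},
]


def minute_bucket(timestamp):
    """Truncate an ISO 8601 timestamp string to the start of its minute."""
    return datetime.fromisoformat(timestamp).replace(second=0, microsecond=0)


def per_minute_counts(events, outcome):
    """Downsample events into a count per minute (the time-series view)."""
    counts = Counter()
    for event in events:
        if event["outcome"] == outcome:
            counts[minute_bucket(event["time"])] += 1
    return dict(counts)


def events_in_minute(events, minute):
    """Drill back down from a metric bucket to the raw events behind it."""
    return [e for e in events if minute_bucket(e["time"]) == minute]


failures = per_minute_counts(EVENTS, "failure")
busiest_minute = max(failures, key=failures.get)
suspicious = events_in_minute(EVENTS, busiest_minute)
```

The counts are cheap to store and chart, and the raw events are only touched when a bucket looks unusual enough to investigate.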

Five Recommendations for Effective Security Data Modeling

If you take anything away from this blog, this is it. Here are my top recommendations:

- Start with clear use cases

Do not pick tools because they are popular or because a vendor says they are the future. Decide what problems you need to solve, then choose the standards and architecture that solve them best.

- Mix and match architectures

Different data types have different needs. Graph databases are great for mapping relationships, time-series for metrics, and data lakes for long-term, flexible storage. Use the right tool for the right job.

- Prioritize open standards

Interoperability is the best hedge against vendor lock-in. Even if you lean on a vendor ecosystem, align your data to open formats so you can plug in new tools or migrate without a full rebuild.

- Design for scale from day one

Security data volumes grow fast. Build your pipelines, storage, and governance with that growth in mind so you are not forced into a costly re-architecture later.

- Stay flexible

Threats evolve, and so should your data model. Avoid over-optimizing for a single use case or threat type. Keep room to adapt without breaking everything you have built.

Closing Thoughts

No standard or architecture will be perfect. Every choice will have gaps, tradeoffs, and moments where it slows you down. What’s important is to understand those imperfections, design around them, and keep adapting as threats and technology change. Treat standards as a baseline, use architecture to make them work for you, and build with the expectation that your needs will evolve.